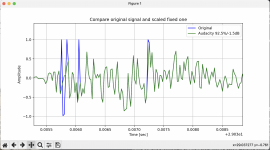

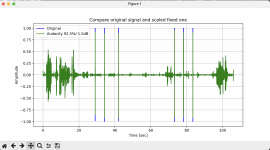

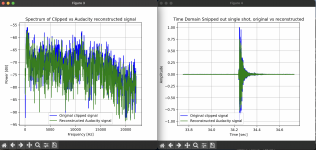

I did a quick and crude clip-repair exercise. I set the clipping threshold to 92.5% and reduced the amplitude by 1.5 dB. I then compared the unmodified file to the clip-restored file by plotting them on the same graph, and chose a region outside of any clipping to find the amplitude difference. In my case I found a peak that measured 0.3646 in the original and 0.3068 in the attenuated/clip-restored file. I multiplied the attenuated file by that ratio (0.3646/0.3068, about 1.188, which undoes the 1.5 dB cut) to make the amplitudes the same, then plotted the waveforms on top of each other and zoomed in where they differ. What I find is that the clip-restored + gain peaks are lower in amplitude than the clipped peaks. This means the peaks are being suppressed rather than restored to their true amplitude. We already know that clipped peaks are lower than the real signal (by definition). But now I see that, at least with Audacity's algorithm, the restored peaks are not remotely accurate. I'm not surprised in the least, as Audacity is trying to make things sound better from an audio perspective, not to faithfully restore the signal to what it would have been.
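For anyone who wants to repeat the gain-matching step, here is a minimal sketch of what I described above, assuming both files are already loaded as NumPy float arrays of the same length. The names `match_gain` and `ratio_to_db` are just made up for this illustration; this is not the exact script I ran.

```python
import numpy as np

def match_gain(original, restored, ref_index):
    """Scale `restored` so that its sample at `ref_index` equals `original` there.

    `ref_index` should point at a peak well outside any clipped region.
    Returns the rescaled array and the linear gain ratio that was applied.
    """
    ratio = original[ref_index] / restored[ref_index]
    return restored * ratio, ratio

def ratio_to_db(ratio):
    """Convert a linear amplitude ratio to decibels."""
    return 20.0 * np.log10(ratio)

# The two peak readings quoted above (original vs. attenuated/clip-restored).
ratio = 0.3646 / 0.3068
print(f"gain ratio = {ratio:.4f}, which is {ratio_to_db(ratio):.2f} dB")
```

The ratio works out to roughly 1.5 dB, which is a useful sanity check: away from the clipped peaks, the only change the restored file should show is the 1.5 dB cut.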

The green is the altered and scaled waveform. It fits the original almost identically, except where there was clipping. In the shot area the green waveform is always lower than the blue. I can't say for certain, but this doesn't look like a faithful signal restoration. It may sound good, but the algorithm is discounting high-frequency energy. The first attachment is a detail view, the second is the big picture.

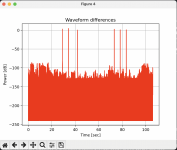

Every shot looks similar. If you "do Python" I can send you the file I used to process the data. I'm not doing sophisticated processing for this, but the graphing ability is similar to MATLAB, which makes it very handy. It had no trouble plotting that 4,677,100-point graph, nor did it give me any trouble zooming in or panning the waveform. If I take the difference between the waveforms, the peak error outside of the shots is 5e-5, which for our purposes is very small. But in the shot, the error is full scale for more than a couple of samples. I think we need to do better if our measurements are going to withstand scrutiny. Here is the difference in dB, since it shows the match is very good EXCEPT for the impulses. The RMS value of the difference of the waveforms is 6.45, or 16.2 dB. I can assure you the -85 dB errors are too small to add up to that; it's all in the very peaks we are trying to measure. Having that kind of error indicates to me that Audacity's clipping restoration algorithm is not adequate for the kind of measurement we are pursuing.
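Here is a rough sketch of how the difference numbers above can be computed, assuming the original and the gain-matched restored file are NumPy float arrays of equal length. The helper names (`near_clip_mask`, `error_stats`, `to_db`) and the `pad` parameter are invented for this example, and flagging "shot" samples by a 92.5%-of-peak threshold is only a crude stand-in for marking the shot regions by hand.

```python
import numpy as np

def near_clip_mask(original, threshold=0.925, pad=0):
    """Flag samples whose magnitude is at least `threshold` times the file's peak.

    `pad` widens each flagged run by that many samples on both sides.
    This is an assumption for illustration, not how Audacity detects clipping.
    """
    mag = np.abs(original)
    mask = mag >= threshold * mag.max()
    if pad > 0:
        kernel = np.ones(2 * pad + 1)
        mask = np.convolve(mask.astype(float), kernel, mode="same") > 0
    return mask

def error_stats(original, restored, shot_mask):
    """Return (peak error outside shots, peak error inside shots, overall RMS error)."""
    diff = original - restored
    peak_outside = np.max(np.abs(diff[~shot_mask]))
    peak_inside = np.max(np.abs(diff[shot_mask]))
    rms = np.sqrt(np.mean(diff ** 2))
    return peak_outside, peak_inside, rms

def to_db(x, floor=1e-12):
    """Convert an amplitude (or array of amplitudes) to dB, avoiding log of zero."""
    return 20.0 * np.log10(np.maximum(np.abs(x), floor))

# The figures quoted above, converted to dB.
print(f"5e-5 -> {to_db(5e-5):.1f} dB")
print(f"6.45 -> {to_db(6.45):.1f} dB")
```

Splitting the peak error into inside-shot and outside-shot parts is the point of the exercise: it shows whether the overall RMS is dominated by the few samples at the impulses or by the background mismatch.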

What are your thoughts on this? Maybe I'm doing something really wrong? If so, tell me how, so things can improve. It was fun to try this correction, and it works very well on non-impulsive sound, far better than I thought it would.